Produced by Tiger Sniff Technology Group

Author | Qi Jian

Editor | Chen Yifan

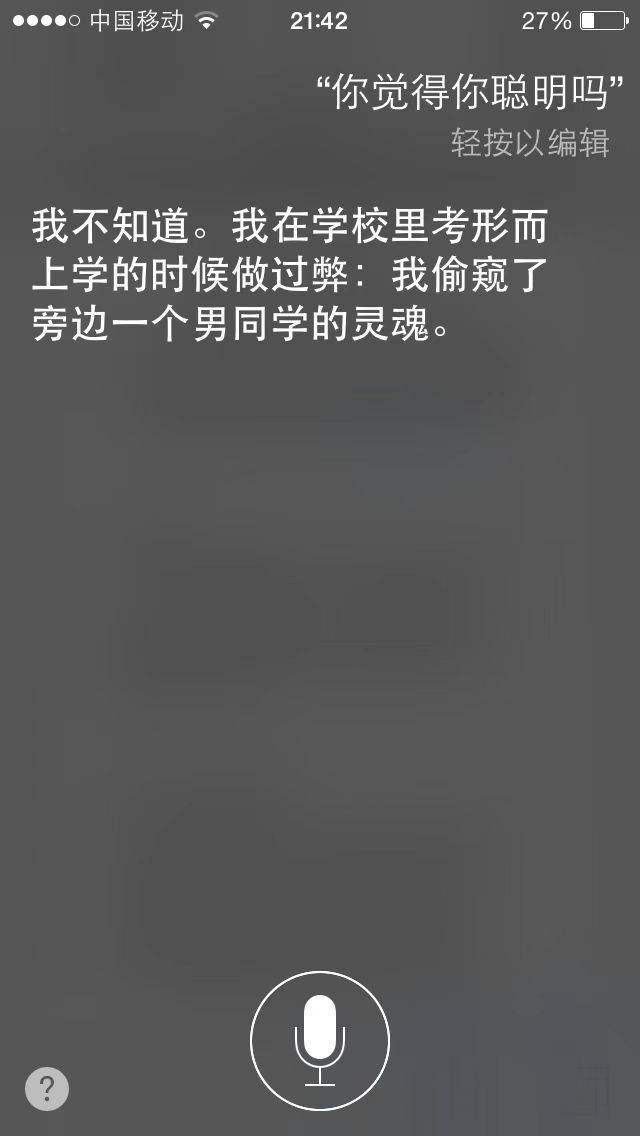

Header image | FlagStudio

"One morning, your AI assistant sent me an interview invitation, so I asked my AI assistant to handle it. The latter thing was done by two AI systems. After many rounds of dialogue between them, the date was finalized and the conference room was booked. There was no human participation in the whole process. "

This is Michael Wooldridge’s vision of the future. A British AI scientist, he is a professor of computer science at the University of Oxford.

What will happen to our society when artificial intelligence can communicate with each other?

During our one-hour conversation, Wooldridge returned to this topic with evident interest. He is one of the world’s leading researchers on multi-agent systems, and collaboration between AI systems is a central focus of his research.

In Wooldridge’s view, although AI has become more and more humanlike, and even surpasses humans in some domains, we are still a long way from genuine artificial intelligence, whether we are talking about AlphaGo, which defeated human champions, or ChatGPT, which answers questions fluently.

While most people are captivated by the phenomenal innovation coming out of OpenAI, Wooldridge is much calmer. ChatGPT demonstrates the power of neural networks, but it also exposes their bottlenecks: enormous energy consumption and computing requirements, and the still-unsolved AI "black box" problem. "Although deep neural networks can often answer our questions perfectly, we don’t really understand why they answer the way they do."

AI that surpasses human beings is often called "strong artificial intelligence", while AI with general human-level intelligence is called artificial general intelligence (AGI). In his book "The Complete Biography of Artificial Intelligence", Wooldridge describes AGI as roughly equivalent to a computer that has all the intellectual abilities of an ordinary person, including communicating in natural language, solving problems, reasoning and perceiving its environment, at a level of intelligence equal to or higher than an ordinary person’s. The literature on AGI usually does not address consciousness or self-awareness, so AGI is regarded as a weaker version of strong artificial intelligence.

Yet even this "weak" form of AGI remains far beyond the reach of contemporary AI research.

"ChatGPT is a successful AI product. It is very good at tasks involving language, but that’s all. We still have a long way to go from AGI. " In a conversation with Tiger Sniff, woodridge said that deep learning enables us to build some AI programs that were unimaginable a few years ago. However, these AI programs that have made extraordinary achievements are far from the magic to push AI forward towards grand dreams, and they are not the answer to the current development problems of AGI.

Michael Wooldridge is a leading international figure in artificial intelligence. He is currently the dean of the School of Computer Science at Oxford University and has devoted more than 30 years to AI research. From 2015 to 2017 he served as president of the International Joint Conference on Artificial Intelligence (IJCAI), one of the top conferences in the field, and in 2020 he received the Lovelace Medal, the highest honour in British computing. He is regarded as one of the most influential scholars in British computing.

ChatGPT is not the answer to building AGI.

Before ChatGPT appeared, most people thought artificial general intelligence was a long way off. For "Architects of Intelligence", a book by Martin Ford published in 2018, 23 leading AI experts were interviewed. Asked by which year there would be a 50% chance of achieving AGI, Ray Kurzweil, a director of engineering at Google, answered 2029, while iRobot co-founder Rodney Brooks answered 2200. The average of the predictions from the 18 experts who answered the question was 2099.

In 2022, however, Elon Musk predicted AGI by 2029. He wrote on Twitter: "2029 feels like a pivotal year. I’d be surprised if we don’t have AGI by then."

In response, the well-known AI scholar Gary Marcus proposed five tests for whether AGI has been achieved: understanding a movie; understanding a novel; working as a competent cook; reliably writing more than 10,000 lines of bug-free code from a natural-language specification or through interaction with non-expert users; and taking arbitrary proofs from the mathematical literature written in natural language and converting them into a symbolic form suitable for symbolic verification.

Today, general-purpose large models such as ChatGPT seem to have taken a big step toward AGI, and the tasks of understanding novels and movies seem just around the corner. Even so, Professor Wooldridge believes it will still be difficult to achieve AGI by 2029.

Tiger Sniff: Expert AI systems such as AlphaGo have defeated humans, but their abilities are very limited in practical applications. Today’s general-purpose large models seem to be changing that. How do you see the future development of expert AI and AGI?

Michael Wooldridge: Symbolic artificial intelligence was an early paradigm of AI. It assumes that intelligence is a matter of knowledge: if you want an intelligent system, you just need to give it enough knowledge.

This approach amounts to modeling human "thinking". It dominated AI from the 1950s to the end of the 1980s and eventually evolved into "expert systems". If you wanted an AI system to do something, such as translate English into Chinese, you first had to capture the expertise of human translators and then use a programming language to transfer that knowledge to the computer.

This approach has serious limitations: it cannot solve problems related to "perception". Perception is your ability to understand and interpret the world around you. For example, I am looking at my computer screen right now, with a bookshelf and a lamp beside me. My human intelligence lets me understand these objects and my surroundings, and describe them, but getting a computer to do the same is very difficult. This is the limitation of symbolic AI: it performs well on problems of accumulating knowledge, but poorly on problems of understanding.
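To make the "intelligence as knowledge" idea more concrete, here is a minimal Python sketch of forward chaining, the kind of if-then rule inference that expert systems were built on. The rules and fact names (`has_fur`, `says_meow` and so on) are hypothetical toy examples, not taken from any real expert system. Note that the program only manipulates symbols it is handed; turning a camera image into a fact like `has_fur` is exactly the perception problem described above.

```python
def forward_chain(facts, rules):
    """Repeatedly fire if-then rules until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires only when every premise is already a known fact.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical hand-coded "expert knowledge": (set of premises, conclusion).
RULES = [
    ({"has_fur", "says_meow"}, "is_cat"),
    ({"has_fur", "barks"}, "is_dog"),
    ({"is_cat"}, "is_mammal"),
    ({"is_dog"}, "is_mammal"),
]

print(sorted(forward_chain({"has_fur", "says_meow"}, RULES)))
# ['has_fur', 'is_cat', 'is_mammal', 'says_meow']
```

Every conclusion the system reaches was put there by a human writing rules, which is both the strength and the weakness of the symbolic approach.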

AI recognizes cats as dogs.

Another approach is artificial intelligence modeled on the brain. If you look at an animal’s brain or nervous system under a microscope, you will find huge numbers of interconnected neurons. Inspired by this vast network structure, researchers tried to model the structure of the animal brain and designed neural networks that resemble it. Here we are not modeling thinking; we are modeling the brain.

Symbolic AI, which "models thinking", and neural networks, which "model the brain", are the two main paradigms of AI. Backed by today’s big data and computing power, neural networks have advanced faster, and OpenAI’s ChatGPT is a typical example of a neural network.

The success of ChatGPT has raised expectations for deep neural networks even further, and some people even think AGI is imminent. AGI is indeed the goal of many AI researchers, but I think we still have a long way to go. Although ChatGPT has strong general abilities when it comes to language, it is not AGI: it does not exist in the real world, and it cannot understand our world.

For example, suppose you start a conversation with ChatGPT, type one sentence and then leave on vacation. When you return a week later, ChatGPT will still be waiting patiently for your next input. It does not realize that time has passed or that anything in the world has changed.

Tiger Sniff: Do you think the prediction that AGI will be achieved by 2029 will come true?

Michael Wooldridge: Although ChatGPT can be regarded, to some extent, as part of general AI, it is not the answer to building AGI. It is a piece of software built and optimized to perform a specific, narrow task. We need more research and technological progress to achieve AGI.

I am skeptical about the idea of achieving AGI by 2029. Human intelligence is grounded in being able to live in the physical world and the social world. For example, I can feel my coffee cup with my hands, I can eat breakfast, and I can interact with other people. Unfortunately, AI not only cannot do these things; it cannot understand what any of them mean. Until AI can perceive the real world, AGI remains a long way off.

Still, although computers’ ability to perceive and understand is limited, they can learn from experience and assist human decision-making. For now, as long as AI can solve problems like a "human assistant", what is the point of arguing over whether a computer system can "perceive and understand"?

We will eventually see a world built entirely by AI.

From driverless cars to facial recognition cameras, from AI painting and AI digital humans to AI-written code and papers, it won’t be long before artificial intelligence shows up in every industry that involves technology, whether education, science, industry, health care or the arts.

Asked whether he uses ChatGPT often, Professor Wooldridge said that ChatGPT is part of his research, so he certainly uses it frequently. In the process, he has found that ChatGPT is a genuinely good helper for routine work and can save a lot of time on many repetitive tasks.

Tiger Sniff: Do you use ChatGPT at work? What do you think of ChatGPT Plus’s subscription model?

Michael Wooldridge: I use ChatGPT often. I think that over the next few years, thousands of different uses may emerge for ChatGPT and general-purpose large models, and they may gradually become everyday tools, just like web browsers and email clients.

I am also a ChatGPT Plus subscriber. As for the $25 price, I think opinions will differ. Users will only know whether ChatGPT suits them, and whether the upgraded version is worth paying for, after trying it themselves. Some people may just find it fun but prefer to do their work themselves. I find it very useful; it handles a lot of repetitive desk work. For now, though, I see it mainly as part of my research.

Tiger Sniff: A new PaaS business model built around large-model capabilities is taking shape in today’s AI market. OpenAI’s GPT-3 gave rise to Jasper, and ChatGPT has attracted BuzzFeed. Do you think a new AI ecosystem will form around general-purpose large models?

Michael Wooldridge: ChatGPT has brought many innovations at the application level, and we may soon see a "big bang" of creativity. I think that within a year or two, ChatGPT and similar applications will be deployed at scale in commercial software, handling simple, repetitive writing tasks such as proofreading, polishing sentences and summarizing.

We may also see new application scenarios in multimodal AI. For example, a large language model combined with image recognition and image generation could play a role in AR. Building on large models’ understanding of video content, AI could quickly generate summaries of videos and TV series. Commercializing multimodal scenarios may take some time, but we will eventually see all kinds of AI-generated content, even virtual worlds created entirely by AI.

Tiger Sniff: What conditions do you think are needed to build a company like OpenAI from scratch?

Michael Wooldridge: I think it is very difficult to build a company like OpenAI from scratch. First, you need huge computing resources: you have to buy tens of thousands of expensive top-end GPUs and build a supercomputer dedicated to AI, and the electricity bill alone may be enormous. You can use cloud services instead, but cloud computing is not cheap either. So each training run may cost millions of dollars and take several months or longer.

You also need an enormous amount of data, possibly data from the entire internet, and obtaining it is itself a difficult problem. Data and computing power are only the foundation; more importantly, you need to assemble a team of top-tier AI R&D talent.

Tiger Sniff: Which companies are strongest in AI research and development? And how do you see the technological differences between countries in this area?

Michael Wooldridge: The players in this race may include internet companies, research institutions and perhaps governments, but not all of them are public about it. At present, only a handful of players have publicly announced large-model capabilities; you can count them on one hand. Large technology companies are developing their own large language models, and their technology is relatively advanced.

So I don’t want to judge who is stronger; I don’t think the models are directly comparable. The differences between them lie mainly in when they entered the market and how many users they have. OpenAI’s technology is not necessarily the most advanced, but it was a year ahead in commercialization, and that one-year lead has brought it hundreds of millions of users, which also puts it far ahead in user feedback data.

The United States has long dominated the field of artificial intelligence, through companies such as Google and Microsoft; even DeepMind, which was founded in the United Kingdom, now belongs to the American company Alphabet, Google’s parent company.

Over the past 40 years, however, China has also developed very rapidly in AI. At the 1980 AAAI Conference (the conference of the American Association for Artificial Intelligence), there was only one paper from China, and it came from Hong Kong. Today, China produces roughly as many papers as the United States.

Of course, Britain also has excellent AI teams, but we don’t have China’s scale. We are a relatively small country, but we certainly have world-leading research teams.

This is an interesting era, and many countries have strong artificial intelligence teams.

Deep learning has hit a bottleneck.

In debates over whether ChatGPT can replace search engines, many people argue that because ChatGPT’s training data only runs up to 2021, it cannot access real-time information and so cannot handle search tasks. Others counter that much of what we search for day to day is knowledge that already existed before 2021; even though a great deal of data has been generated since, the actual demand for it is not high.

In fact, ChatGPT’s lineage was trained on very large amounts of data. Its predecessor GPT-2 was pre-trained on 40GB of text, while GPT-3 drew on roughly 45TB of raw text (about 570GB after filtering). These pre-training datasets include many kinds of text, such as news articles, novels and social media posts, which let large models learn language knowledge across different domains and styles. Experience has shown that even with data only up to 2021, ChatGPT is still a "polymath" that knows everything from astronomy to geography.

This has also raised concerns about the data used to train large models. If we want to train a model larger than ChatGPT, is there enough data in the world? And will the future internet be flooded with AI-generated data, so that AI training turns into a data "ouroboros", a snake eating its own tail?

The ouroboros, a snake eating its own tail, is often taken to symbolize infinity.

Tiger Sniff: In your book you describe neural networks as the most dazzling technology in machine learning. Today, neural networks keep pushing us forward in algorithms, data and especially computing power. As the technology progresses, do you see bottlenecks in the development of neural networks?

Michael Wooldridge: I think neural networks currently face three main challenges. The first is data. Tools like ChatGPT are built from huge corpora, much of it from the internet. If you want to build a system 10 times larger than ChatGPT, you may need 10 times as much data. But is there that much data in the world? Where would it come from? How would it be created?

For example, when we train a large language model, we have plenty of English and Chinese data. But when we want to train a model for a less widely spoken language, such as that of a small country like Iceland with a population of under a million, there is far less data, which leads to a data shortage.

At the same time, once generative AI as powerful as ChatGPT is deployed at scale, a worrying phenomenon may arise: much of the data on the future internet may be generated by AI. When we use internet data to train the next generation of AI tools, we may end up training on data that AI created.

The second challenge is computing power. If you want to train a system 10 times larger than ChatGPT, you need 10 times the computing resources. Training and using such systems consumes a great deal of energy and produces a lot of carbon dioxide, which is also a widespread concern.

The third major challenge is scientific progress: we need fundamental scientific advances to push this technology forward. Simply adding data and computing resources can indeed take AI research and development further, but not as far as genuine scientific innovation. Just as with learning to use fire or inventing the computer, it is scientific breakthroughs that produce qualitative leaps in human progress. In terms of scientific innovation, the main challenge facing deep learning is how to develop more efficient neural networks.

Beyond these three challenges, AI needs to be "interpretable". At present, humans cannot fully understand the logic behind neural networks; for many problems, the computation is hidden inside the AI "black box". Although neural networks can give good answers, we don’t really understand why they give them. This not only hinders neural network research and development but also means humans cannot fully trust the answers AI provides. A related issue is robustness: to use AI in this way, we need to ensure that a neural network will not fail or get out of control in unpredictable ways.

Although these bottlenecks lie ahead, I don’t think we will see neural networks overturned in the short term; we don’t even fully understand how they work yet, so we are far from replacing them. But I don’t think neural networks are the answer to artificial intelligence. I think they are only one component of a "complete artificial intelligence"; there must be other components, but we don’t yet know what they are.

Tiger Sniff: If computing power is one of the key factors in AI development, what innovative research have you seen in AI chip development?

Michael Wooldridge: Computing power is likely to become a bottleneck for AI in the future. The human brain is extremely energy-efficient: when thinking, it draws only about 20 W, roughly the power of a light bulb. Compared with computers, that is minimal.

There is a huge gap between AI systems and natural intelligence: AI systems need vast amounts of computing power and data, whereas humans learn far more efficiently. Yet this human "light bulb" always runs at just 20 W, and it is not a very bright bulb.

The challenge, therefore, is to make neural networks and machine learning technologies such as ChatGPT more efficient. At present, from both the software and the hardware perspective, we don’t know how to make neural networks learn as efficiently as the human brain, and there is still a long way to go.

When systems talk directly to each other.

A multi-agent system is an important branch of AI: a system composed of multiple agents that can interact, cooperate or compete with one another to achieve a goal. In a multi-agent system, each agent has its own knowledge, abilities and behavior, and completes tasks by communicating and cooperating with other agents.

Multi-agent systems have applications in many fields, such as robot control, intelligent transportation and power system management. Their advantage is that they enable distributed decision-making and task allocation, improving a system’s efficiency and robustness.

Today, with the help of large AI models, multi-agent systems and LLMs can be combined in many scenarios, greatly expanding the boundaries of what AI can do.

Tiger Sniff: Where do you see opportunities to combine today’s much-hyped large AI models with multi-agent systems?

Michael Wooldridge: My research focuses on what happens when AI systems communicate with each other. Most people have smartphones with AI assistants, such as Siri, Alexa or Cortana, which we call "agents".

For example, when I want to reserve a table at a restaurant, I call the restaurant myself. But in the near future, Siri or another intelligent assistant will be able to do this for me: it will call the restaurant and make the reservation on my behalf. The idea behind multi-agent systems is this: why can’t my Siri talk directly to another Siri? Why not let these AI programs communicate with each other? Multi-agent systems research focuses on the problems that arise when AI programs communicate with each other.

Combining multi-agent systems with large models is a project we are currently working on. In my view, there is very interesting work to be done in building multi-agent systems on top of large language models. Can we achieve higher intelligence by having large language models communicate with each other? I think this is a fascinating challenge.

For example, suppose we need to schedule a meeting. We both use Siri, but you prefer morning meetings and I prefer afternoon meetings. When our preferences conflict, how will the Siris representing you and me work together to resolve it? Will they negotiate? When AI talks not only to people but also to other AI systems, many new problems arise. This is the field I study, and I believe multi-agent systems are the direction of the future.
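As a rough illustration of the negotiation question, the Python sketch below has two hypothetical scheduling assistants settle on a meeting slot. It uses a simple concession protocol chosen purely for illustration (an assumption, not a description of Wooldridge’s research or of how Siri works): each round, each agent widens the set of slots it will accept by one, in order of its owner’s preference, and they stop at the first slot both accept. The slot times and preference scores are made up.

```python
from dataclasses import dataclass


@dataclass
class Assistant:
    """A hypothetical scheduling agent acting for one person."""
    owner: str
    utility: dict  # slot -> how much the owner likes it (higher is better)

    def ranked_slots(self):
        # The owner's slots, most preferred first.
        return sorted(self.utility, key=self.utility.get, reverse=True)


def negotiate(a, b, max_rounds=10):
    """Each agent concedes one slot per round; return (slot, round) or None."""
    ranked_a, ranked_b = a.ranked_slots(), b.ranked_slots()
    rounds = min(max_rounds, len(ranked_a), len(ranked_b))
    for k in range(1, rounds + 1):
        # In round k each agent accepts only its top-k slots.
        common = set(ranked_a[:k]) & set(ranked_b[:k])
        if common:
            # Pick the slot with the highest joint utility; on a tie,
            # max() keeps the first candidate, i.e. the earliest in sorted order.
            best = max(sorted(common), key=lambda s: a.utility[s] + b.utility[s])
            return best, k
    return None


SLOTS = ["09:00", "10:00", "11:00", "14:00", "15:00", "16:00"]
# "You" prefer mornings: 09:00 scores 6, 16:00 scores 1.
you = Assistant("you", {s: len(SLOTS) - i for i, s in enumerate(SLOTS)})
# "I" prefer afternoons: 09:00 scores 1, 16:00 scores 6.
me = Assistant("me", {s: i + 1 for i, s in enumerate(SLOTS)})

print(negotiate(you, me))  # ('11:00', 4)
```

With one agent preferring mornings and the other afternoons, their acceptable sets first overlap in round 4, on 11:00 and 14:00, which tie on joint utility; the tie-break picks 11:00. A real protocol would also have to cope with agents that misreport their preferences to gain an advantage, which this sketch ignores.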

Another interesting question about multi-agent systems and large language models: if AI systems only communicate with each other, do they need human language at all? Could we design more efficient languages for them?

However, this raises other problems: we need to set rules for how these agents and AI programs interact. How should humans govern a society made up of AIs?

Siri’s question and answer

AI can’t go to jail instead of human beings.

The British scientist Michael Faraday demonstrated the electric motor in 1821 and never anticipated the electric chair. Karl Benz, who patented the automobile in 1886, could not have predicted that his invention would cause millions of deaths over the following century. Artificial intelligence is a general-purpose technology: its applications are limited only by our imagination.

As AI develops by leaps and bounds, we also need to pay attention to the potential risks and challenges it may bring, such as threats to data privacy and job losses. So while advancing AI technology, we must also carefully consider its social and ethical impact and take appropriate measures.

If we really can build AI with human-level intelligence and abilities, should it be regarded as equal to human beings? Should it have its own rights and freedoms? These questions deserve serious consideration and discussion.

Tiger Sniff: There is a popular joke on the Chinese internet: "AI will never take over accounting or auditing, because AI can’t go to jail." AIGC faces similar problems with copyright. AI can easily imitate human painting and writing styles, and ownership of works that humans create with AI is unclear. How do you see the legal and moral risks of artificial intelligence?

Michael Wooldridge: The idea that AI can’t go to jail is a great one. Some people think AI can act as their "moral agent" and be responsible for its own actions. But this clearly misreads how human beings define right and wrong. Rather than trying to create "morally responsible" AI, we should develop AI responsibly.

AI itself cannot be held responsible. When something goes wrong with AI, the people who own, build and deploy it are responsible. If the AI they use breaks the law, or they use AI to commit crimes, then it is humans who must go to prison.

ChatGPT also needs stronger oversight on privacy. If ChatGPT has collected information from the entire internet, then it must have read information about each of us: my social media, my books, my papers, comments others have made about me on social media, even deleted content. AI may be able to build a profile of anyone from this information, further infringing on our privacy.

There is already a great deal of legal discussion about artificial intelligence, not only about ChatGPT. The legal issues around AI have always existed and are becoming increasingly important, but society as a whole is still debating and exploring them.

I think ChatGPT and other AI technologies will become more and more common over the next few years. But we also need to use them carefully, to make sure we don’t lose key human skills such as reading and writing. AI can undoubtedly help improve productivity and quality of life, but it cannot completely replace human thinking and creativity.
